“Digital” is not a noun

Lucas Hilderbrand / University of California, Irvine

For this column, I offer a polemic that might alternately be called “semiotics of the digital,” a cranky reflection on the ways we talk about digital technologies and the meanings that underlie seemingly benign grammatical usage. (This is what happens when there’s nothing good to watch on TV.)

Noun

“Digital” is not a noun.1 It’s an adjective that modifies a technology, an image, a culture, an “age,” or the like. “The digital” is not a thing in itself; it merely points to an underlying structure of binary code that manages information without any medium specificity or essence beyond a string of 1s and 0s. “The digital” does not exist. And yet “the digital” is often spoken of and even occasionally theorized as a self-evident category—one whose vagueness hedges against rapid obsolescence or implies an entire world changed by computation and new delivery platforms.2 We all know more or less what is meant by “the digital”: a mythical technological utopia that has so often been hailed and believed in. When used as a noun, what does it name? It names an abstraction, an ideology, an emperor with no clothes.

Additionally, there is no such thing as a recognizable or stable digital aesthetic. “The digital” has not ushered in “the end of cinema,” as was feared at the turn of the millennium; rather, it has opened up a broader conception of the cinema as related to moving images, screens, and interfaces including but extending beyond celluloid.3 “The digital” extends to so many devices that claims to a singular aesthetic are difficult to justify. Email, electronic thermometers, DVDs, and microwave timers are all “digital” technologies, yet we do not experience them in the same way. As others have pointed out before me, we do not perceive their underlying codes and computational commands; we perceive their graphical interfaces and control buttons.

As more and more of our communications and art become digitized, the mere fact of digitality comes to matter less and less. When it comes to “the digital,” there’s no there there. What we experience is a variety of interfaces and storage formats, not “the digital.” Rather than speaking in terms of “the digital,” we should think in more precise ways that recognize the multiplicities of digital technology. Conceiving of “the digital” as a noun (as a subject, an object, a thing) perhaps misleads us into treating electronic technologies and media as something unified, something with ontology. We lose the complexity and specificity of digital technologies by reducing them to a generalizing noun.

Adjective

“Digital,” in its most accurate usage, is a neutral modifier pointing to information encoding. Yet there is often an implicit valuation in the use of “digital” as an adjective, one that suggests “better,” “advanced,” or “high-tech.” Digital technologies are not inherently better than analog ones, though they are often uncritically accepted as just that.

Recently, I was reminded of when I turned against the adjectival “digital”—or at least, when I began to see it as an empty promise. In the early 2000s, a friend in Manhattan “upgraded” to Time Warner Cable’s new digital cable service. This was in the early days of bundling: in exchange for an increasingly expensive monthly fee, he could subscribe to digital cable (with music channels) bundled with high-speed Internet and phone service. I was struck by how unreliable the digital signal was. The Internet crashed, the phone went dead, and the TV image frequently froze or broke up into blocky color patterns. Meanwhile, my own apartment’s old-school analog cable from the same company worked just fine. At the time, digital cable was a novelty with kinks that hadn’t been worked out yet, like the premature launch of a buggy beta version of a new platform.

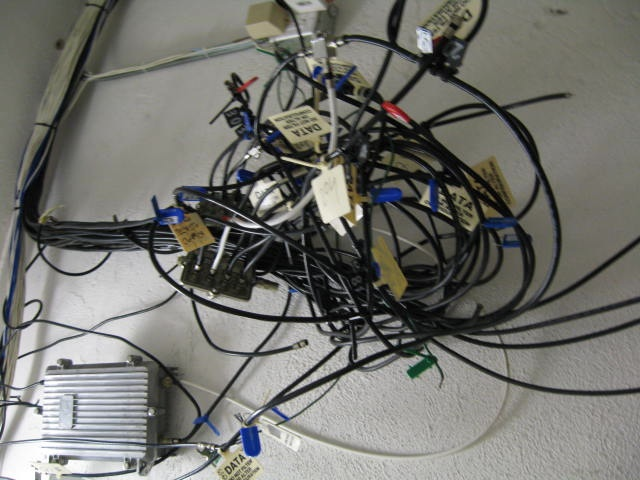

However, digital video has made little progress over the past decade. We’re all too familiar with its recurring failures: DVD-Rs that cannot be read, dirty Netflix discs that freeze, academic talks derailed by PowerPoint or DVD menus, satellite TV signals that disappear due to atmospheric interference, screenings foiled by faulty projector connections, streaming videos that halt or have been deactivated. This trend came full circle when I recently moved into a 1920s apartment building in Hollywood and subscribed to Time Warner Cable with the HD tier and a DVR. Although we are supposed to have entered the age of high definition and digital broadcasting by now, the interference on my digital cable service is the worst that I’ve ever seen, and this in the heart of the entertainment industry.4 Too many channels and signals are compressed and forced through insufficient wiring, while the remote and cable box seem positively reluctant to communicate with each other. The image breaks up, and the sound drops out just about every minute. Now, if I’m watching a screwball comedy recorded from TCM or a catty reality TV confession on Bravo, I can no longer expect to hear all the dialogue. This is progress? It feels as though the heralded digital revolution was forced upon the people, rather than the other way around.5

I’m not against digital technologies per se; I’m addicted to as many digital devices and modes of communication as the next person. But I am skeptical of the marketing ruse and theoretical hype that digital technologies always improve upon existing analog ones. Machines break, and signals drop out. The underlying digital code does nothing to ameliorate that fact of life. If anything, it exacerbates it. We need to think of “digital” as a neutral, boringly informational adjective, not as a superlative.

Adverb

Okay, so I’m fudging my semantic framework quite a bit here, but how might we modify the meaning of digital’s adverb form, “digitally,” to understand audiences’ choices and actions? How do audiences use digital media? The tension between marketed innovations and everyday adoption has perhaps been exposed most tellingly during the past few years. While the content and electronics industries have been pushing the rollout of HD TVs and Blu-ray, the technologies that consumers have adopted most voraciously have been anything but high definition. Instead, it’s the low-resolution mobile and access technologies of YouTube and cell phones that have spurred nearly instant adoption. The convenience of immediate access and the exchange of free media (though the hardware is anything but free) have far outstripped enthusiasm for HD. Yet again, this suggests that technologies are adopted in unpredictable ways that defy the industry’s intentions and goading.

Maybe this dialectic of low resolution/high definition suggests that “digital” has already changed its meaning and connotations, having less to do with medium specificity or formal considerations and more to do with infrastructures and behaviors. Among young viewers in particular, there seems to be almost an indifference to aesthetics in favor of finding media how and when and where they want it.6 I suppose if you’re texting, emailing, IM-ing, surfing the web, and sipping your Starbucks while watching TV or streaming a feature film on YouTube, it doesn’t matter what the video looks like, or whether it keeps stalling to load, or whether it’s segmented into a dozen separate clips, because you’re multitasking. This, apparently, is how the digital generation consumes content now. Although I’ve never been a “glance” TV viewer (when I watch TV, I watch TV), I was struck while teaching film and media theory this past spring that earlier TV studies theories of distracted viewing may be newly relevant for reconceptualizing how audiences watch now.

The appeal of watching “digitally” for many users, then, is not about optimal images but about accessibility, and possibly even the expectation of technical difficulties. This raises the question of which of our cinema and media studies frameworks actually give us the language to think beyond “the digital” to the ways audiences now watch digitally.

Image Credits:

1. The 1s and 0s of Binary Code

2. Noun: A person, place or thing.

3. Neon Adjective

4. “Wookie Here! Save the Adverb!”

5. Coaxial Cables, photo by author.

Please feel free to comment.

1. Dictionary.com offers one meaning for “digital” as a noun: “10. one of the keys or finger levers of keyboard instruments.” I’m pretty sure that I have never heard anyone use the word this way.

2. I’m not targeting specific new media theorists here so much as responding to a recurring tendency toward abstraction or casual generalization about digital technologies within and beyond media studies. Some of the early work in the field, such as Sherry Turkle’s conceptualization of the performance of identities online, seems more useful than ever in the age of social networking and Second Life, while later historical work by Lisa Gitelman and others has challenged the premise of newness and insisted on the materiality of the hardware.

3. D.N. Rodowick has offered perhaps the most exhaustive interrogation of medium specificity and anxieties about the meaning of cinema in the digital age in The Virtual Life of Film (Cambridge: Harvard University Press, 2007).

4. In 2008 the City of Los Angeles sued Time Warner Cable for its poor customer service. See http://articles.latimes.com/2008/jun/05/business/fi-cable5

5. Obvious connections can be made here to the controversial digital broadcast conversion, and I encourage comments on the topic. See also Lisa Parks’s Flow column on the topic: http://flowjournal.org/?p=2266.

6. HD TVs, which excel at brightness, contrast, and color saturation, may be mesmerizing for spectacular images but do little to improve the low-res everyday.

Lucas, you make some really excellent observations here. I especially like your point about the digital “revolution” being forced upon the public through marketing, etc. As Time Warner and other media providers push for us to adopt digital technology and become dependent upon it, how do we resolve that with their simultaneous push for net neutrality?

Laura, perhaps I’m misunderstanding your comment or your use of the phrase, but could you clarify what you mean by media providers pushing for net neutrality? While Google has spoken in favor of net neutrality, companies such as Time Warner have proposed and attempted to implement price tiering systems which are in complete opposition to net neutrality, and as a general rule Time Warner and other media conglomerates aren’t pushing for net neutrality. It is a rather ambiguous term so maybe I’m just misunderstanding what you were referring to.

Jac, I meant pushing AGAINST it: TW and others are fighting to charge more per usage, while at the same time encouraging more usage.

Ahhhh, that makes sense; thanks for clarifying.

Thought-provoking. Perhaps the most resonant claim, for me, is the railing against the need to “ontologize” backwards, creating a touchable, lovable “digital” evolution. As the author implies, we should always be wary of simple teleology, and in this case of who exactly might be shaping the rhetoric of the digital turn. I know that Sandy Stone, writing contemporaneously with many of the early cyberculture cowboys (all implications intended), was prescient in pointing out that not everyone gets to experience the ecstatic mind/body split that digital technologies would supposedly afford us so easily, drawing parallels with privileged, Euro-centric, male-dominated Enlightenment thought. There are indeed troubling connotations to the nounification of this word. Astute observations!